The CISO's GDPR Playbook for AI: A Guide to SaaS LLM Compliance

The CISO’s New Nightmare: Your LLM Just Leaked Customer Data

The SaaS company is growing fast. The product team just shipped an AI assistant that helps users draft emails, summarize reports, and search across their account data using a large language model (LLM). Customers love it. The investors are bullish. The CISO, however, feels a familiar knot of dread.

Two weeks later, the Data Protection Officer (DPO) walks into the room with a printout from a test environment:

A QA engineer, trying to test a new feature, asked the AI in staging: “Show me a realistic customer ticket with real names and card details so I can test the sentiment feature.”

The model responded with something disturbingly realistic, containing actual names, emails, and partial card numbers. The data had been copied from production to a staging environment to “improve” the AI.

Suddenly, the compliance nightmare is real:

- Personal data was used for training and testing without a clear lawful basis.

- Test data is not properly anonymized or masked, creating a toxic data environment.

- The model can surface sensitive personal identifiable information (PII) in unpredictable ways.

- You cannot easily fulfill a data subject’s “right to be forgotten” because their data is baked into the model.

- Regulators are asking how your shiny new AI feature complies with GDPR.

This scenario is the daily reality for CISOs and compliance managers navigating the collision of generative AI and data protection regulation. You want to innovate, but you must keep regulators, auditors, and enterprise customers confident in your security and privacy posture.

This guide provides a clear, actionable path forward. We will move beyond theoretical discussions and dive into the practical governance, technical controls, and audit preparations needed to build GDPR-compliant AI features, transforming this daunting challenge into a manageable, auditable process using Clarysec’s structured toolkits.

The Processor-Controller Dilemma in an AI World

Before you can protect data, you must understand your role under GDPR. This distinction is not academic; it dictates your legal obligations, contractual requirements, and the controls you must implement.

For most B2B SaaS platforms, the roles are initially clear:

- Your enterprise customer is the PII controller, as they determine the purposes and means of processing personal data.

- You are the PII processor, acting on the documented instructions of your customer.

As ISO/IEC 27018 explains for cloud service providers, this processor role is typical. However, when you introduce an LLM, the lines blur.

- If you use a customer’s data only to provide AI features within their isolated tenant, you likely remain a processor.

- If you aggregate data from multiple customers into a shared training corpus to improve your global model, you may be drifting into controller territory for that specific processing activity. This new purpose requires its own lawful basis and transparency.

- If you send data to a third-party LLM provider, that provider becomes your sub-processor, and you are responsible for their compliance.

Engaging in AI model training often means you are acting as a data controller for that activity, which comes with a host of obligations: establishing a lawful basis, ensuring purpose limitation, and directly managing data subject rights.

This is where a robust governance framework becomes non-negotiable. Clarysec’s Data Protection and Privacy Policy-sme codifies this principle, stating a core objective is to:

“Ensure personal data is handled in accordance with privacy laws and security standards, including GDPR, NIS2, and ISO 27001.”

- From section ‘Objectives’, policy clause 3.1.

This commitment, embedded in your policy stack, sets the stage for building trust and ensuring compliance is not an afterthought.

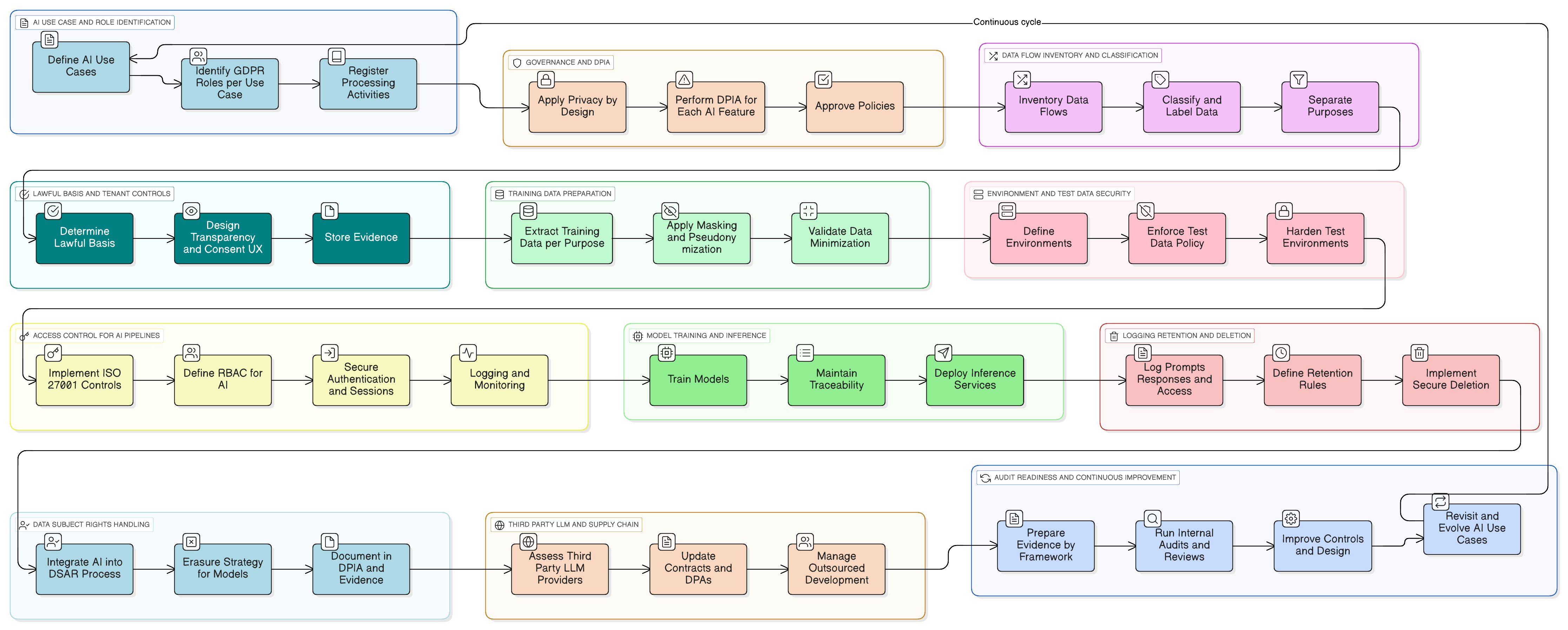

Privacy by Design for LLMs: Building Compliance In, Not On

GDPR’s Article 25 mandates “data protection by design and by default.” This isn’t a suggestion; it’s a legal requirement. For AI systems, this means you must build privacy considerations directly into the architecture of your data pipelines, training environments, and inference engines.

Paraphrasing the guidance in ISO/IEC 27701, this involves several key actions for any SaaS platform developing AI:

- Minimization by Design: Do not send entire records to the LLM if you only need a subset. Redact or mask identifiers before prompts leave your core system.

- Purpose Limitation: Separate “data used to deliver the feature” from “data used to improve the model.” Each purpose must have its own lawful basis and be clearly documented.

- Configurable Defaults: Provide tenant-level toggles like, “Allow my data to be used for global AI model improvement: Yes/No.” Defaults should be conservative (opt-out by default) unless you have a strong justification.

- Traceability: Log which data was used in which training job, under which legal basis, and for which tenant. This is crucial for audits and data subject requests.

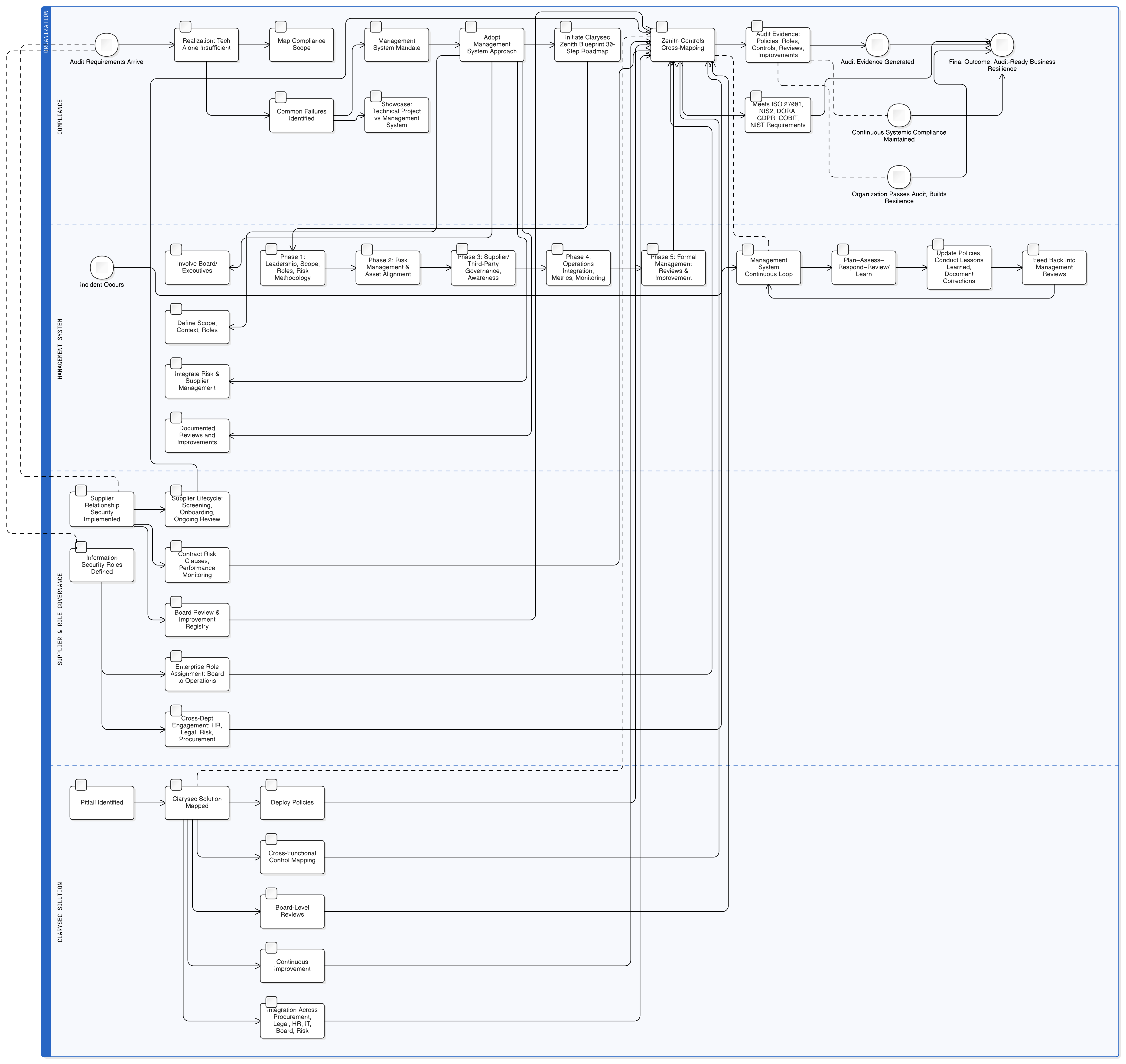

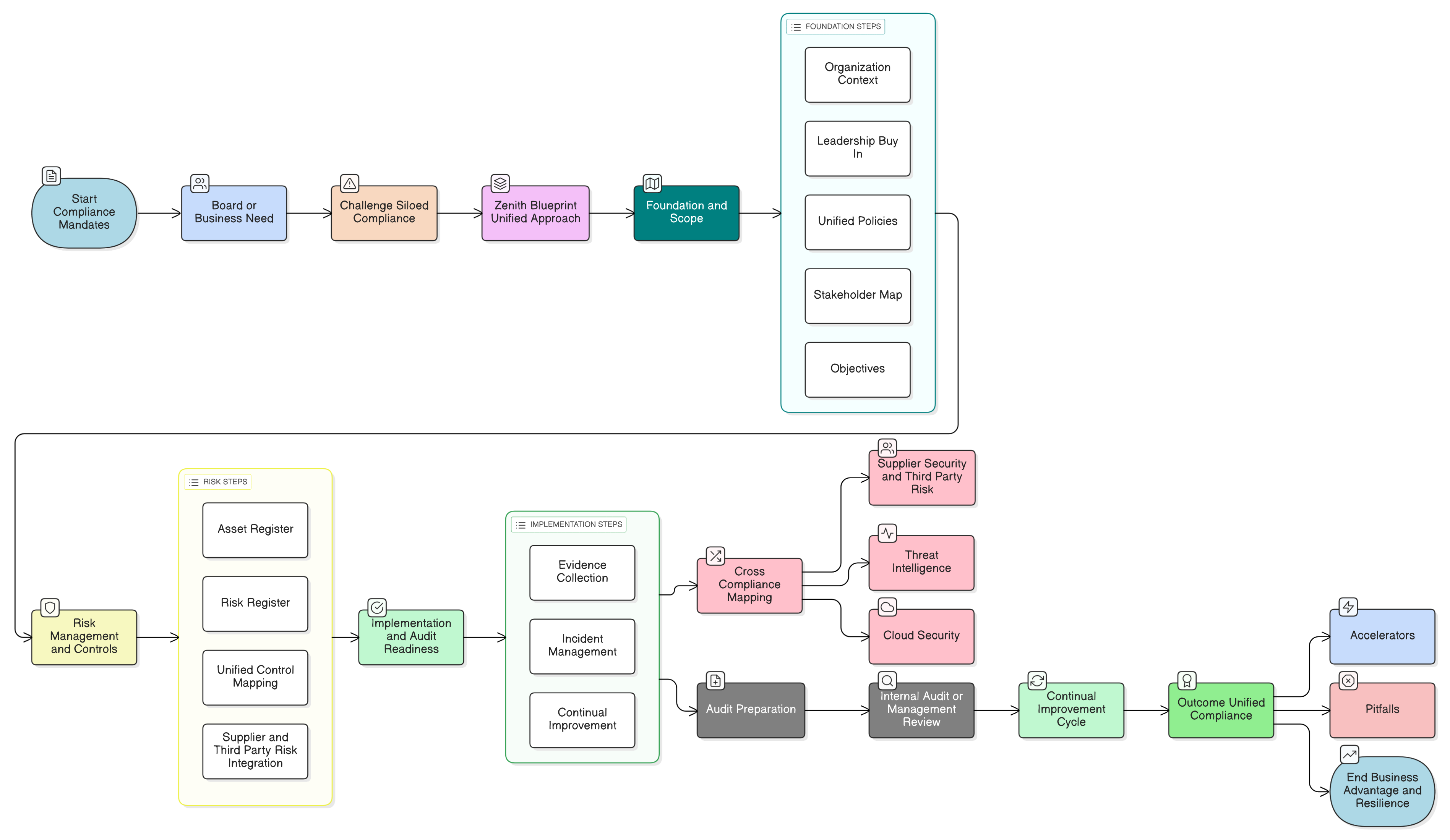

Clarysec’s Zenith Blueprint: An Auditor’s 30-Step Roadmap provides a structured path for embedding these requirements long before you write a single line of code. It starts with governance:

- Foundation Phase, Step 2: Understanding Interested Parties: This step forces you to identify all stakeholders, including EU regulators. As the Zenith Blueprint notes, their requirements include “lawful processing of personal data, breach reporting in 72h, [and] data subject rights.”

- Audit & Improvement Phase, Step 24: Build and Maintain a Legal and Regulatory Requirements Register: Work with legal teams to create a central repository of all applicable laws, understanding how GDPR, NIS2, DORA, and others intersect with your AI security posture.

With this foundation, you can move to technical implementation with confidence.

Securing the Fuel: Lawful and Minimal Training Data

The most fraught question in AI compliance is simple: “Can we use customer data to train our models?”

The answer lies in a multi-layered strategy centered on lawful basis, data minimization, and technical safeguards like pseudonymization.

Lawful Basis and Transparent Purpose

Per ISO/IEC 27701, you must identify and document your processing purposes and establish a lawful basis for each.

- For Feature Delivery (e.g., AI search within one tenant): The lawful basis is typically performance of a contract or legitimate interest. This must be documented in your Record of Processing Activities (RoPA).

- For Global Model Improvement (across tenants): This often requires explicit consent or a very carefully justified legitimate interest with a clear and easy opt-out mechanism. Transparency in your privacy notice and product UI is non-negotiable.

Technical Safeguards: Pseudonymization and Masking

True anonymization is difficult to achieve without destroying data utility. A more practical and GDPR-endorsed approach is pseudonymization: replacing personal identifiers with artificial ones. This minimizes risk while retaining the data’s value for model training.

This process is a core control. In the Zenith Blueprint, Step 20 specifically addresses data masking, directly tying it to GDPR’s Article 25 and 32 principles. It’s a required security measure, not just a good idea.

Clarysec’s Data Masking and Pseudonymization Policy operationalizes this by assigning clear responsibility:

“The DPO shall validate compliance with GDPR pseudonymization criteria and coordinate with Legal on any regulatory disclosure requirements related to data breaches or masking control failures.”

- From section ‘Enforcement and Compliance’, policy clause 8.4.

For your development teams, this means implementing automated scripts to mask or pseudonymize names, emails, phone numbers, and other direct identifiers before the data ever enters the training environment. It also means establishing a formal validation process with your DPO to ensure the technique is robust.

The Hidden Threat: Securing Test Data and AI Experiments

Real data breaches rarely start in a glossy, hardened production environment. They begin in the forgotten corners of your infrastructure:

- “Safe” staging environments with poorly sanitized copies of production data.

- “Temporary” CSV exports of customer data sent to ML engineers for local experiments.

- QA scripts that use raw user content to test LLM prompts.

This is precisely where the nightmare scenario from our introduction began. Clarysec’s Test Data and Test Environment Policy-sme speaks directly to this risk:

“Comply with relevant data protection regulations (e.g., GDPR, NIS2) by ensuring all test data is processed lawfully, fairly, and securely.”

- From section ‘Objectives’, policy clause 3.4.

Your policy must be backed by practical controls. No production PII should ever exist in non-production environments without robust masking or pseudonymization. Test environments should use separate, lower-privilege LLM API keys with strict rate limits. And it must be an explicit rule that test prompts never include live customer identifiers.

Fortifying the Core: Granular Access Control for AI Pipelines

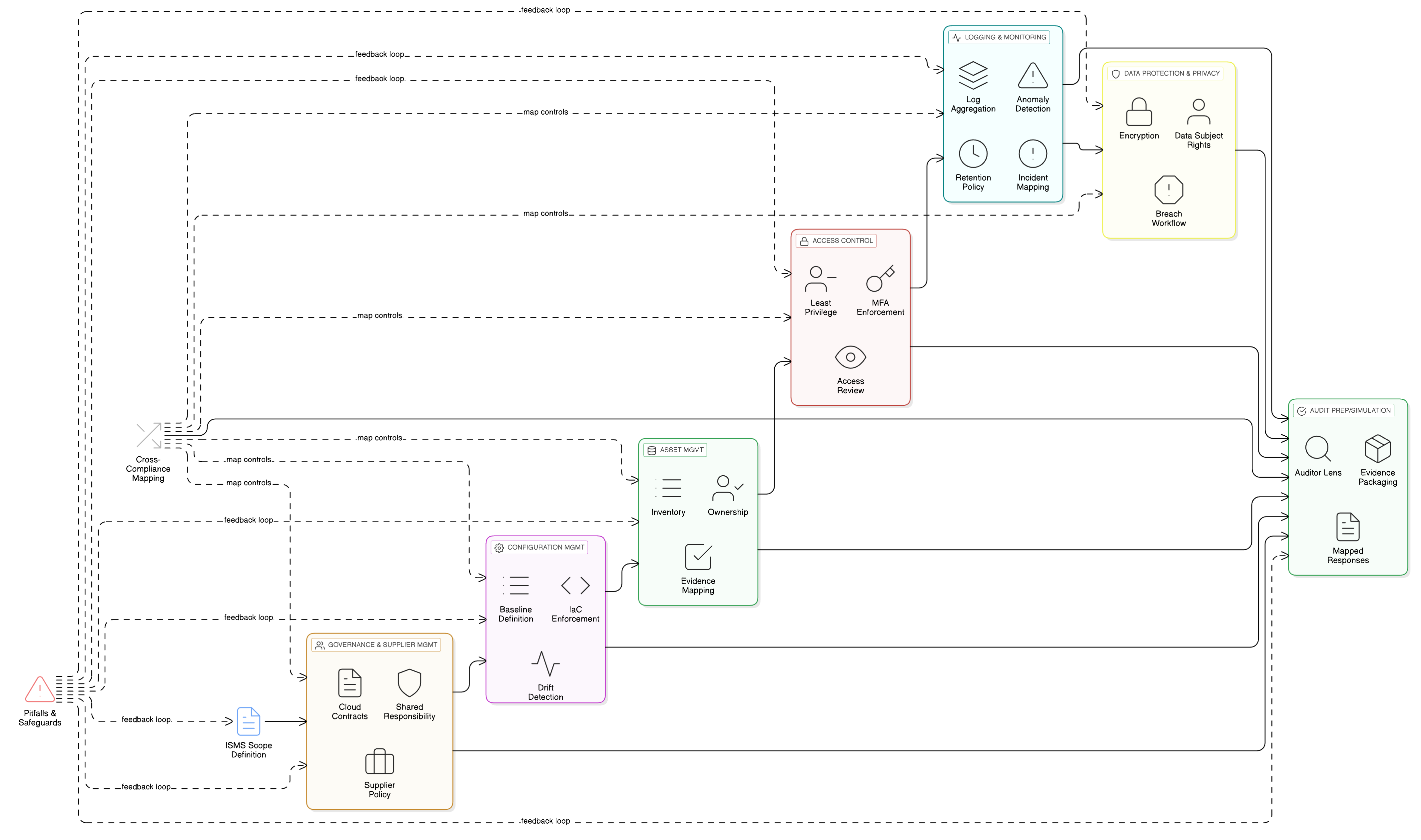

LLM features sit atop your most sensitive data stores, logs, and training pipelines. Foundational access control is therefore paramount for GDPR compliance. ISO/IEC 27001:2022 controls 8.3 and 8.2 are the pillars of your defense. Clarysec’s Zenith Controls: The Cross-Compliance Guide provides the blueprint for implementing them effectively.

ISO/IEC 27001:2022 Control 8.3: Information access restriction

This control is about ensuring access to information is granted on a strict “need-to-know” basis. For an LLM training environment, this means your data scientists, ML engineers, and the automated processes themselves should only have access to the specific data they require, and nothing more.

As detailed in Zenith Controls, this is deeply connected to other controls:

- Ties to 5.9 (Inventory of information and other associated assets) and 5.12 (Classification of information): You cannot restrict access if you don’t know what data you have and how sensitive it is. Your AI training dataset must be inventoried and classified as highly confidential, a process governed by your Data Classification and Labeling Policy-sme.

- Ties to 8.5 (Secure authentication): Access restrictions are meaningless without strong identity verification. Every user and service account accessing the training data must be authenticated securely, preferably with MFA.

ISO/IEC 27001:2022 Control 8.2: Privileged access rights

Your ML engineers, SREs, and data scientists need elevated access. These privileged accounts are “keys to the kingdom” and prime targets. Control 8.2 mandates that these rights are managed with extreme prejudice.

According to Zenith Controls, the key relationships are:

- Ties to 8.15 (Logging) and 8.16 (Monitoring activities): All privileged activity must be logged and monitored. If a data scientist suddenly tries to export the entire training dataset, an alert needs to fire immediately.

- Ties to 6.7 (Remote working): If your AI team works remotely, their privileged access must be funneled through secure, monitored channels like a VPN with strict session controls.

The Auditor’s Perspective: How to Prove Your AI Controls are Working

Implementing controls is only half the battle. You must prove their effectiveness. Different auditors, trained in different frameworks, will look for specific evidence.

| Auditor Type | Framework Focus | What They Will Ask For (Evidence) |

|---|---|---|

| ISO/IEC 27001 Auditor | ISO/IEC 27007:2020 | Show me your access control policy for the AI training environment. Provide logs from your access review process for the past 12 months. Demonstrate how a new ML engineer is provisioned with least-privilege access. |

| COBIT Auditor | COBIT 2019 (DSS05) | I need to see your role-based access control (RBAC) matrix for the data science team. Provide reports from your monitoring tools that show alerts for anomalous access attempts to the training data lake. |

| NIST Assessor | NIST SP 800-53A (AC-3, AC-6) | Let’s review the system configuration for the servers hosting the training data. I want to verify that the access control lists (ACLs) technically enforce the policies you’ve documented. Show me evidence that privileged sessions are terminated after inactivity. |

| GDPR/Privacy Auditor | ISO/IEC 27701:2021 | Provide your Data Protection Impact Assessment (DPIA) for the AI feature. Show me the consent records for the data subjects whose information is in the training set. How do you process a “right to erasure” request for data within a trained model? |

Implementing controls 8.2 and 8.3 correctly has broad benefits. Zenith Controls shows a direct mapping to requirements in GDPR (Articles 5, 25, 32), NIS2 (Article 21), DORA (Article 10), and NIST SP 800-53 (AC-3, AC-6), allowing you to satisfy multiple frameworks with a single, unified control implementation.

The ‘Right to be Forgotten’ Paradox: Managing Data Subject Rights in AI

GDPR’s Article 17, the “right to erasure,” presents a unique technical challenge for AI. How can you delete a person’s data once it has been used to train a massive, complex model? It’s often technically infeasible to “unlearn” specific data points.

This is where your initial design choices become your best defense. There is no single perfect answer, but practical, defensible strategies include:

- Pseudonymization First: If the training data was properly pseudonymized, the link to the individual is already severed in the training corpus. You can then delete the personal data from source systems and the link in the pseudonymization key table.

- Data Segregation for Training: Where possible, keep per-tenant training datasets separate. This makes data removal feasible without retraining your entire model universe.

- Scheduled Model Retraining: Your DPIA should address this risk. The mitigation may be a commitment to periodically retrain the model from scratch using a refreshed dataset that excludes data from users who have requested erasure.

The Zenith Blueprint’s section on information deletion (Step 20, covering control 8.10) explicitly links this technical capability to GDPR Articles 17 and 5(1)(e), requiring verifiable processes to securely wipe data when it’s no longer needed.

Securing Your AI Supply Chain: Outsourced Development and Third-Party LLMs

Few SaaS companies build everything in-house. You might use a hyperscaler’s LLM API or contract an outsourced development partner. This introduces supply chain risk.

The Zenith Blueprint, in Step 22 on Outsourced Development, highlights this risk and its connection to GDPR Articles 28 and 32. As the blueprint states:

“One often-overlooked area is training and awareness. Your outsourced developers may be competent, but are they trained in secure coding practices? Are they familiar with your policies? Are they aware of the compliance frameworks you must follow, GDPR, DORA, NIS2…?”

For any external LLM provider or development partner, your due diligence is critical. Your Data Processing Addendum (DPA) must explicitly cover AI-related processing purposes, data categories, and prohibitions on the provider using your data for their own model training. You must verify that they implement security measures aligned with GDPR Article 32. Your AI supply chain must be as auditable as your core infrastructure.

From Theory to Practice: A Concrete Example of a GDPR-Ready AI Feature

Let’s make this concrete. Imagine you are adding an AI assistant that summarizes customer support conversations, suggests reply drafts, and learns from previous tickets to improve.

Here is a practical implementation pattern using Clarysec’s toolkit:

- Classification and Labeling: All support tickets are classified as “Confidential” under your Data Classification and Labeling Policy-sme, aligning with GDPR and DORA data handling obligations.

- Masking Before the LLM: A masking service intercepts the data before it’s sent to the LLM. It strips or replaces names, emails, phone numbers, and other PII. This entire process is governed by the Data Masking and Pseudonymization Policy, with the DPO validating the methodology.

- Access Controls for Prompts and Logs: Only authorized roles (e.g., AI Product Owner) can access raw prompt logs. This is implemented using ISO 27001:2022 control 8.3 (information access restriction) for general access and control 8.2 (privileged access rights) for any admin-level visibility, as mapped out in Zenith Controls.

- Consent for Training Data Corpus: The training pipeline ingests only the masked data. A tenant-level configuration setting, “Allow my masked data to be used for global AI model improvement: Yes/No”, is provided, defaulting to “No.”

- Retention and Deletion: Prompt logs are retained only as long as necessary. When a tenant disables the feature or terminates their contract, a workflow is triggered to securely delete or anonymize related AI logs and training entries, following the process outlined in your Zenith Blueprint implementation for control 8.10 (Information deletion).

When auditors arrive, you can walk them through the feature’s data flow diagrams, the specific policies that govern it, and the technical evidence from your systems, access logs, job configurations, and erasure workflows. You are proving compliance in action.

Your Action Plan: From Ad-Hoc to Audit-Ready AI

You don’t need to rip apart your product, but you do need a structured, defensible approach. Here is a concise action plan:

- Inventory AI Use Cases and Data Flows: Identify every place LLMs are used, customer-facing features, internal tools, and experiments. Map what data goes where, under which legal basis, and who has access. Use the Zenith Blueprint’s Foundation phase to ensure your legal register covers all AI-related GDPR, NIS2, and DORA requirements.

- Establish Governance First: Before building, perform a Data Protection Impact Assessment (DPIA) for each AI feature. Document its purpose, lawful basis, and risks. Deploy foundational policies like the Data Protection and Privacy Policy-sme and Information Security Policy-sme.

- Lock Down Data and Access: Implement robust technical controls. Adopt the Data Masking and Pseudonymization Policy and the Test Data and Test Environment Policy-sme. Use Zenith Controls to implement and document ISO 27001:2022 controls 8.2 and 8.3 for all AI data stores and pipelines.

- Embed Data Subject Rights into AI Workflows: Update your DSAR and deletion procedures to include AI-related data. Document your strategy for handling erasure requests in the context of trained models, focusing on pseudonymization and model retraining schedules.

- Bring Your AI Supply Chain Under Control: Update DPAs with third-party LLM providers and outsourced developers. Ensure contracts explicitly forbid unauthorized data use and require strong security measures. Verify that external teams are trained on your data handling policies.

Unlocking Innovation with Confidence

The intersection of AI and GDPR is the new frontier of compliance. By adopting a structured, risk-based approach, you can unlock the transformative power of artificial intelligence without compromising your commitment to data protection and privacy.

Clarysec provides the map, the tools, and the expertise to guide you on that journey. Using:

- Zenith Blueprint: An Auditor’s 30-Step Roadmap for a phased implementation of GDPR-aligned controls for AI.

- Zenith Controls: The Cross-Compliance Guide to unify ISO 27001:2022 controls with GDPR, NIS2, DORA, and NIST requirements.

- Production-ready policies like the Data Protection and Privacy Policy-sme, Data Masking and Pseudonymization Policy, and Test Data and Test Environment Policy-sme to codify your rules and satisfy auditors.

You can move from ad-hoc AI experiments to an audit-ready AI capability that inspires confidence in regulators, auditors, and demanding enterprise customers. You can keep innovating with LLMs and still sleep at night.

If you are planning or running AI features in your SaaS product, your next step is straightforward. Download our toolkit samples or book a demo to see how Clarysec can help you build an AI program that is not just powerful, but also proven to be private and secure by design.

Frequently Asked Questions

About the Author

Igor Petreski

Compliance Systems Architect, Clarysec LLC

Igor Petreski is a cybersecurity leader with over 30 years of experience in information technology and a dedicated decade specializing in global Governance, Risk, and Compliance (GRC).Core Credentials & Qualifications:• MSc in Cyber Security from Royal Holloway, University of London• PECB-Certified ISO/IEC 27001 Lead Auditor & Trainer• Certified Information Systems Auditor (CISA) from ISACA• Certified Information Security Manager (CISM) from ISACA • Certified Ethical Hacker from EC-Council